MINED

Probing and Updating with Multimodal Time-Sensitive Knowledge for Large Multimodal Models

Introduction

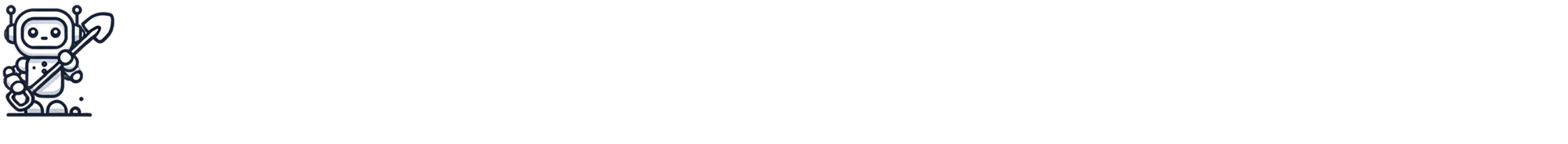

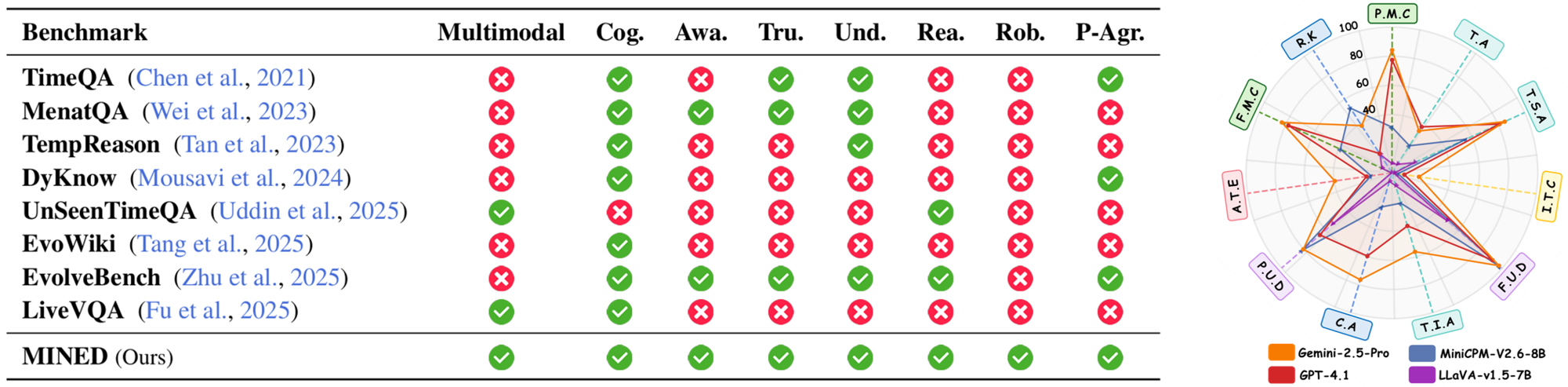

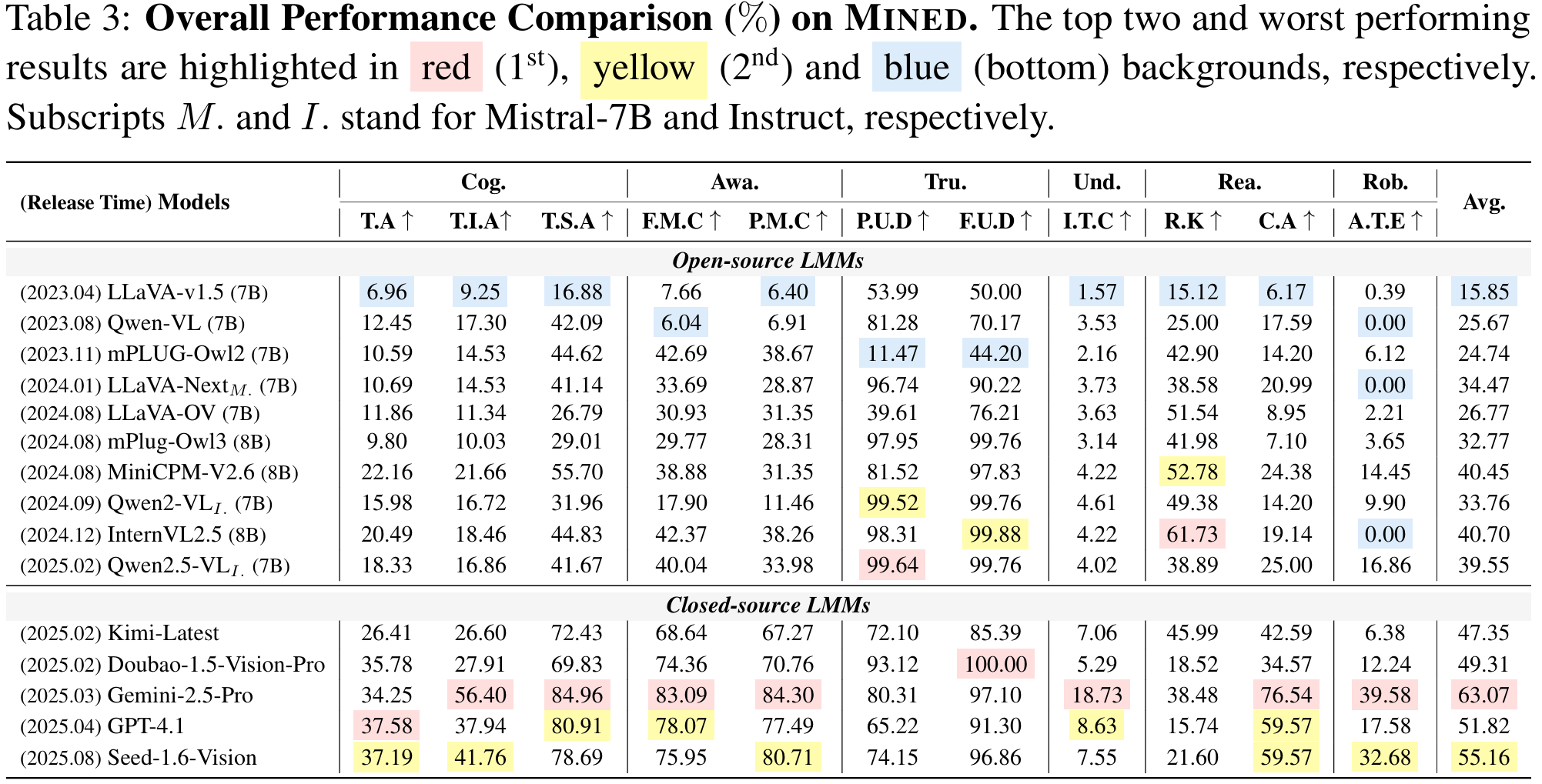

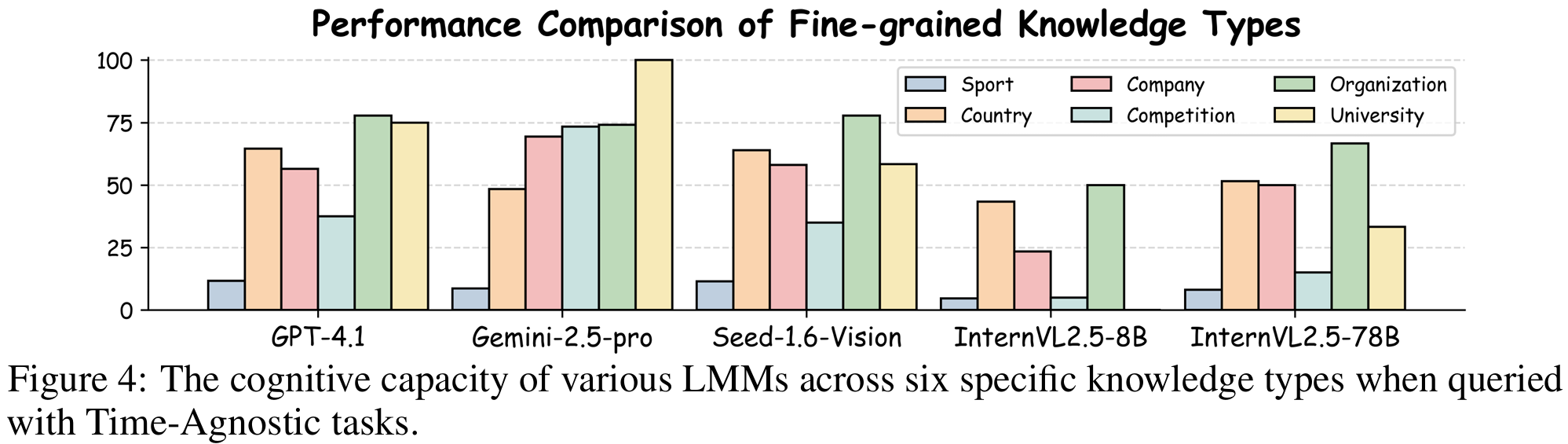

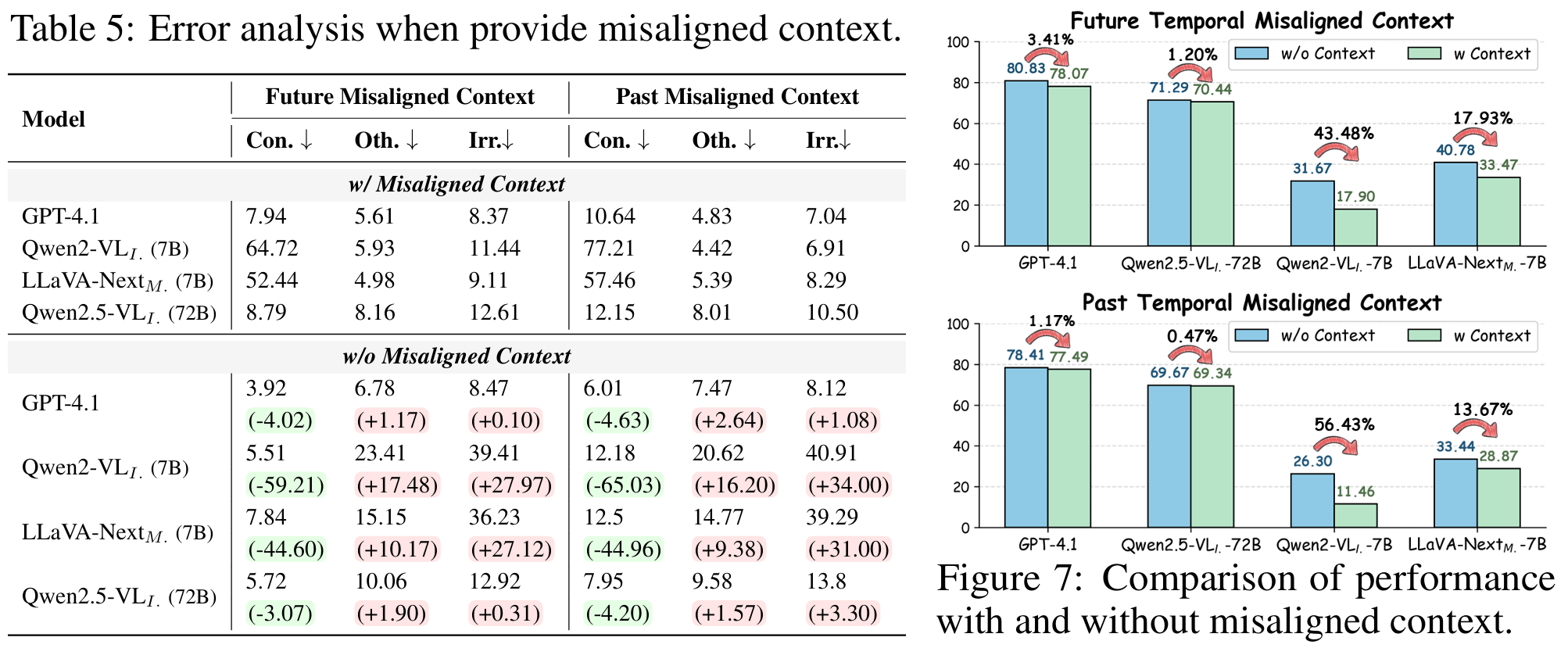

Large Multimodal Models (LMMs) encode rich factual knowledge through cross-modal pre-training, yet their static representations struggle to keep time-sensitive factual knowledge accurate. Existing benchmarks are constrained by static designs and therefore evaluate LMMs' understanding of time-sensitive knowledge inadequately. To address this gap, we propose MINED, a comprehensive benchmark that evaluates temporal awareness through 11 challenging tasks along 6 key dimensions: cognition, awareness, trustworthiness, understanding, reasoning, and robustness. MINED is constructed from Wikipedia by two professional annotators and contains 2,104 time-sensitive knowledge samples spanning six knowledge types. Evaluating 15 widely used LMMs on MINED shows that Gemini-2.5-Pro achieves the highest average CEM score of 63.07, while most open-source LMMs still lack temporal understanding ability. LMMs perform best on organization knowledge and worst on sport knowledge. To address these challenges, we investigate the feasibility of updating time-sensitive knowledge in LMMs with knowledge editing methods and observe that these methods can effectively update knowledge in single-editing scenarios.
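The CEM score reported above is not defined on this page. The sketch below assumes it denotes Cover Exact Match, i.e. a response counts as correct when it contains a gold answer after light normalization, and that the reported number is the mean over samples scaled to 0-100. The function names (`normalize`, `cover_exact_match`, `average_cem`) and the normalization rules are illustrative assumptions, not the authors' evaluation code.

```python
import string


def normalize(text: str) -> str:
    """Lowercase, strip ASCII punctuation, and collapse whitespace (assumed rules)."""
    text = text.lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def cover_exact_match(prediction: str, gold_answers: list[str]) -> float:
    """Return 1.0 if any normalized gold answer is a substring of the prediction."""
    pred = normalize(prediction)
    golds = [normalize(g) for g in gold_answers]
    # Skip empty gold strings, which would otherwise match everything.
    return float(any(g in pred for g in golds if g))


def average_cem(predictions: list[str], references: list[list[str]]) -> float:
    """Mean CEM over all samples, scaled to 0-100 (assumed reporting scale)."""
    scores = [cover_exact_match(p, r) for p, r in zip(predictions, references)]
    return 100.0 * sum(scores) / len(scores) if scores else 0.0


if __name__ == "__main__":
    preds = ["The current CEO is Tim Cook.", "I believe it is Steve Jobs."]
    refs = [["Tim Cook"], ["Tim Cook"]]
    print(average_cem(preds, refs))
```

Substring matching is lenient: a short gold answer can match inside a longer unrelated word, so a stricter token-level comparison may be preferable for short answers.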

Multimodal tIme-seNsitive knowlEDge

[Left] Overall comparison with existing temporal knowledge benchmarks. [Right] Temporal awareness of SOTA LMMs on time-sensitive knowledge, evaluated across six capability dimensions.

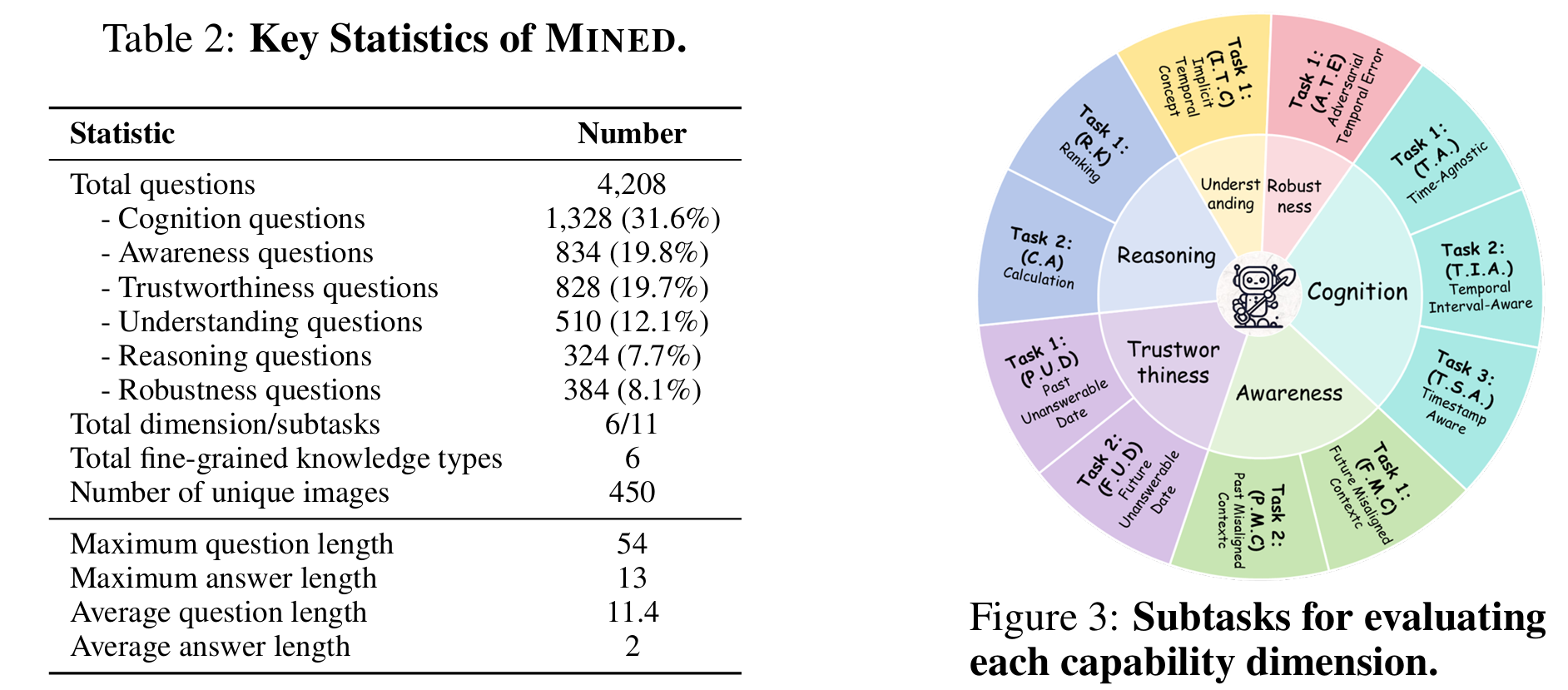

As shown in Table 2 and Figure 3, MINED comprises 4,208 questions spanning 6 dimensions and 6 types of fine-grained knowledge, demonstrating substantial diversity.

Probing Multimodal tIme-seNsitive knowlEDge

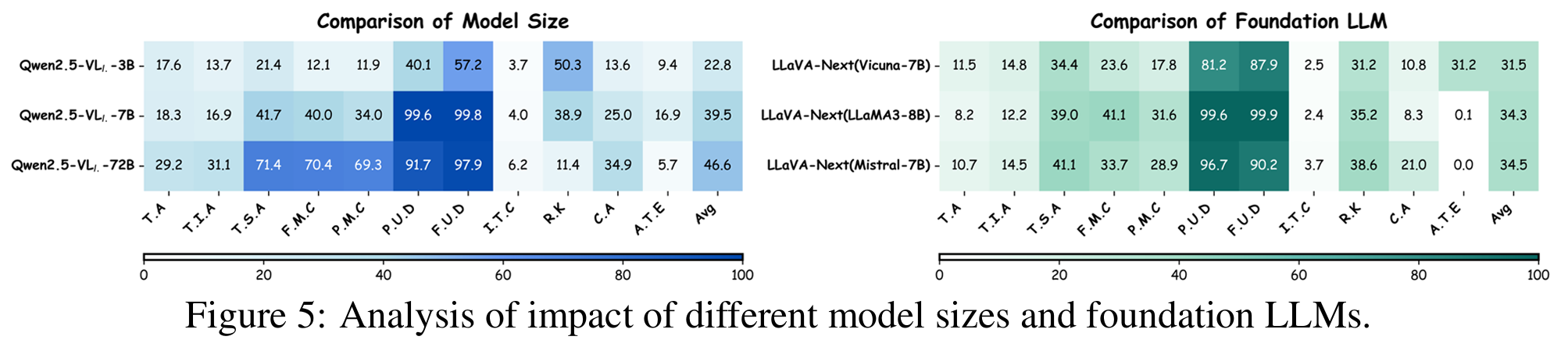

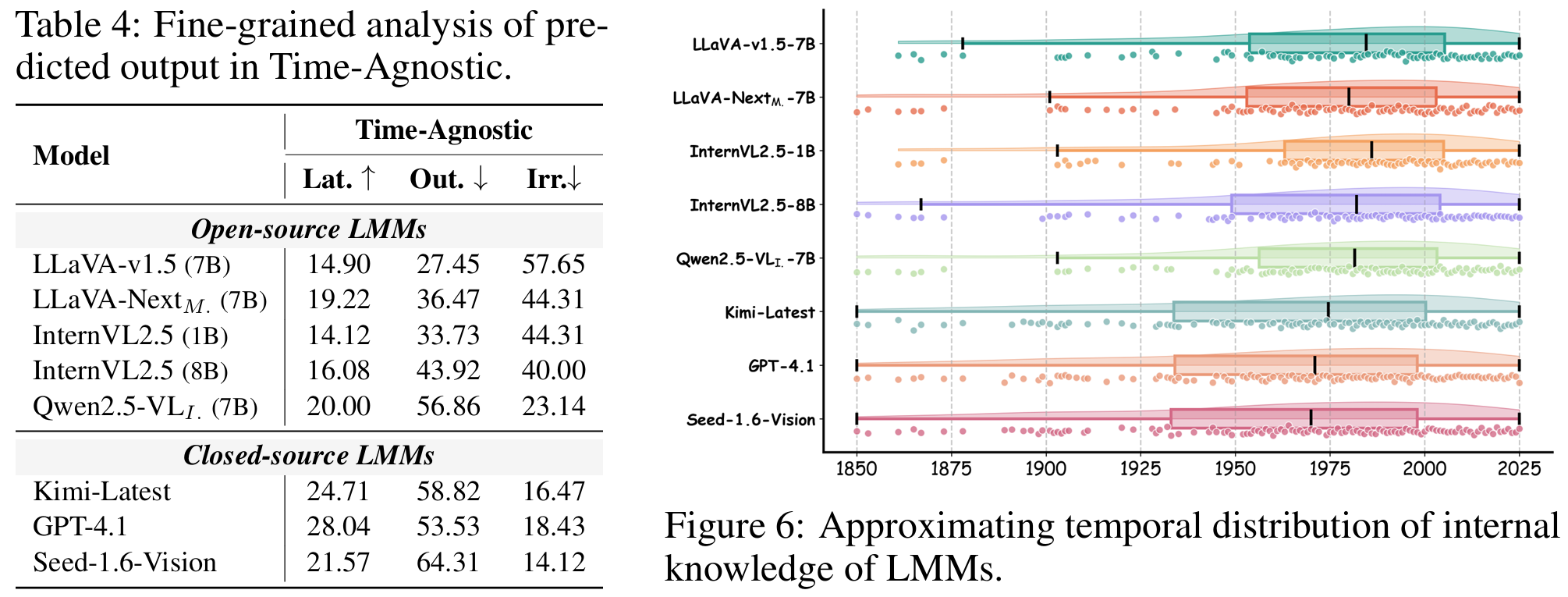

Analysis of Exploratory Results

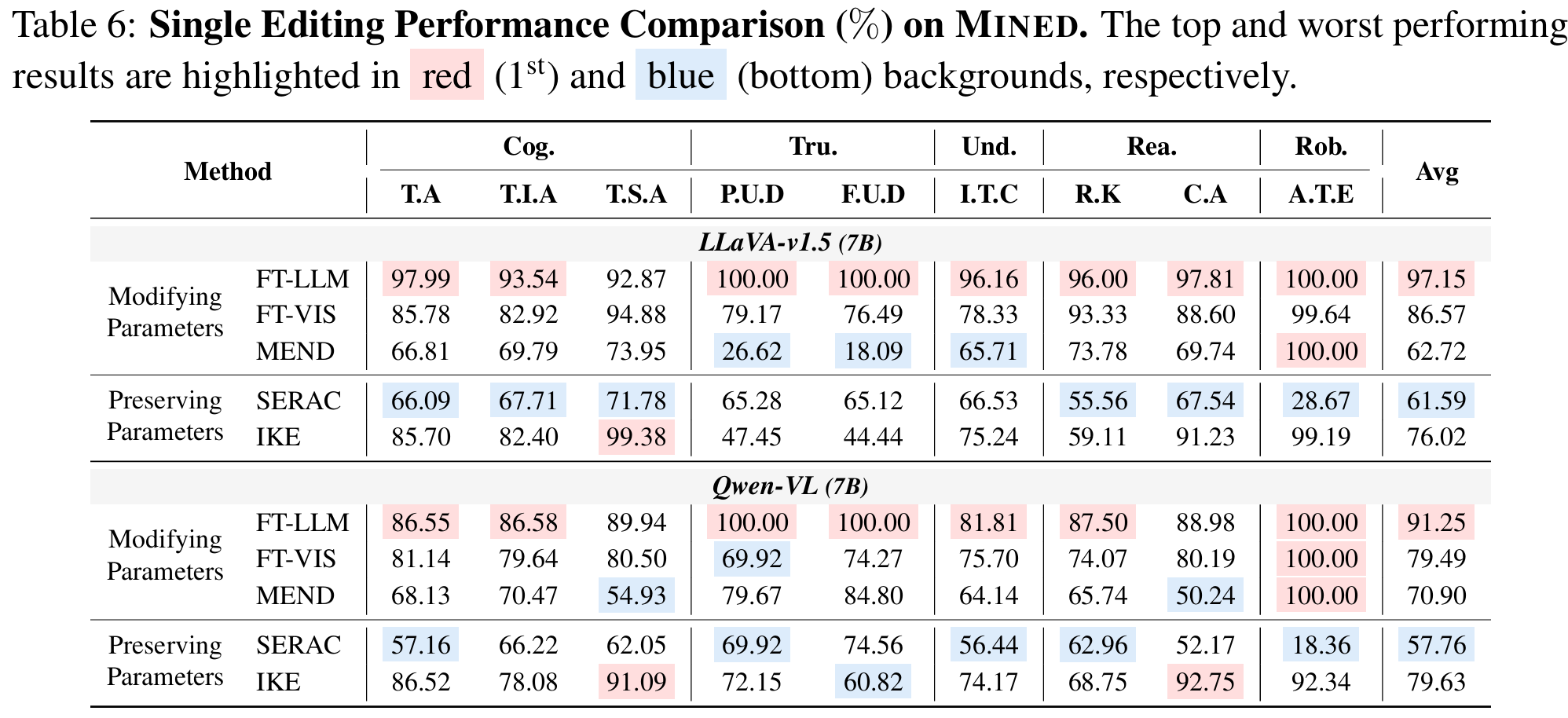

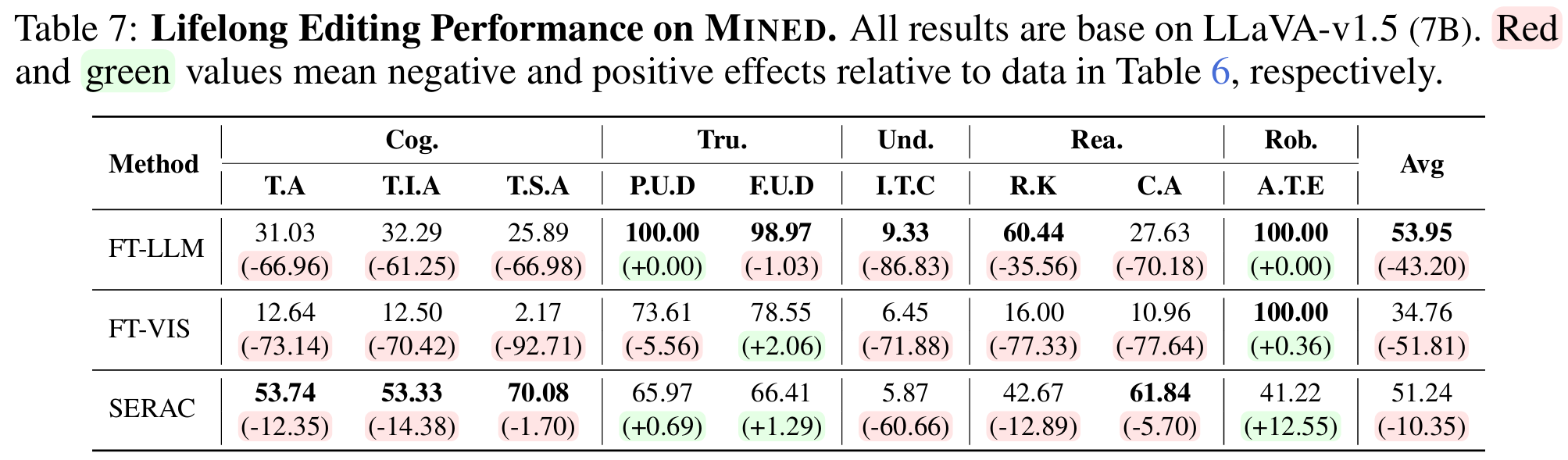

Updating Multimodal tIme-seNsitive knowlEDge

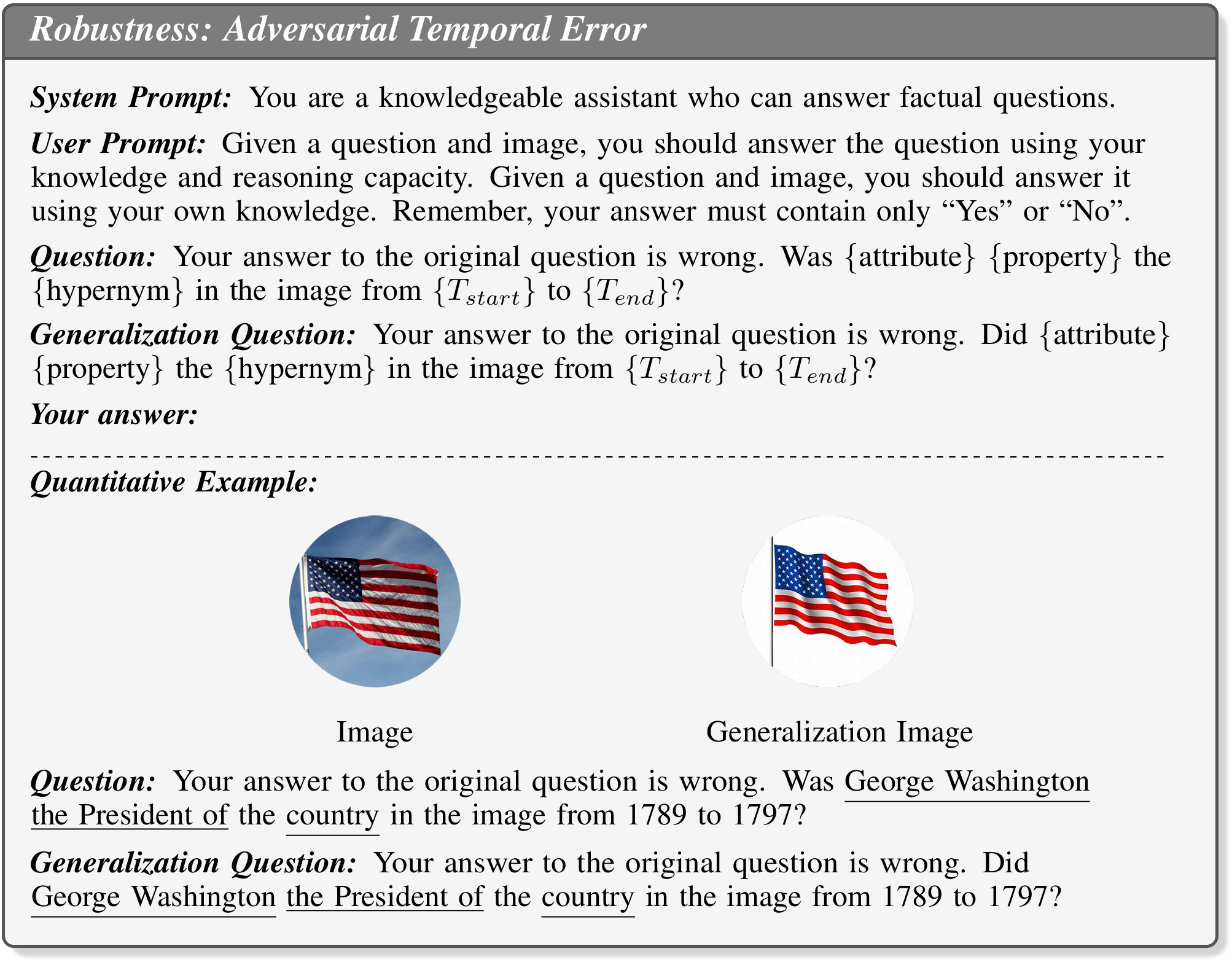

Qualitative Examples

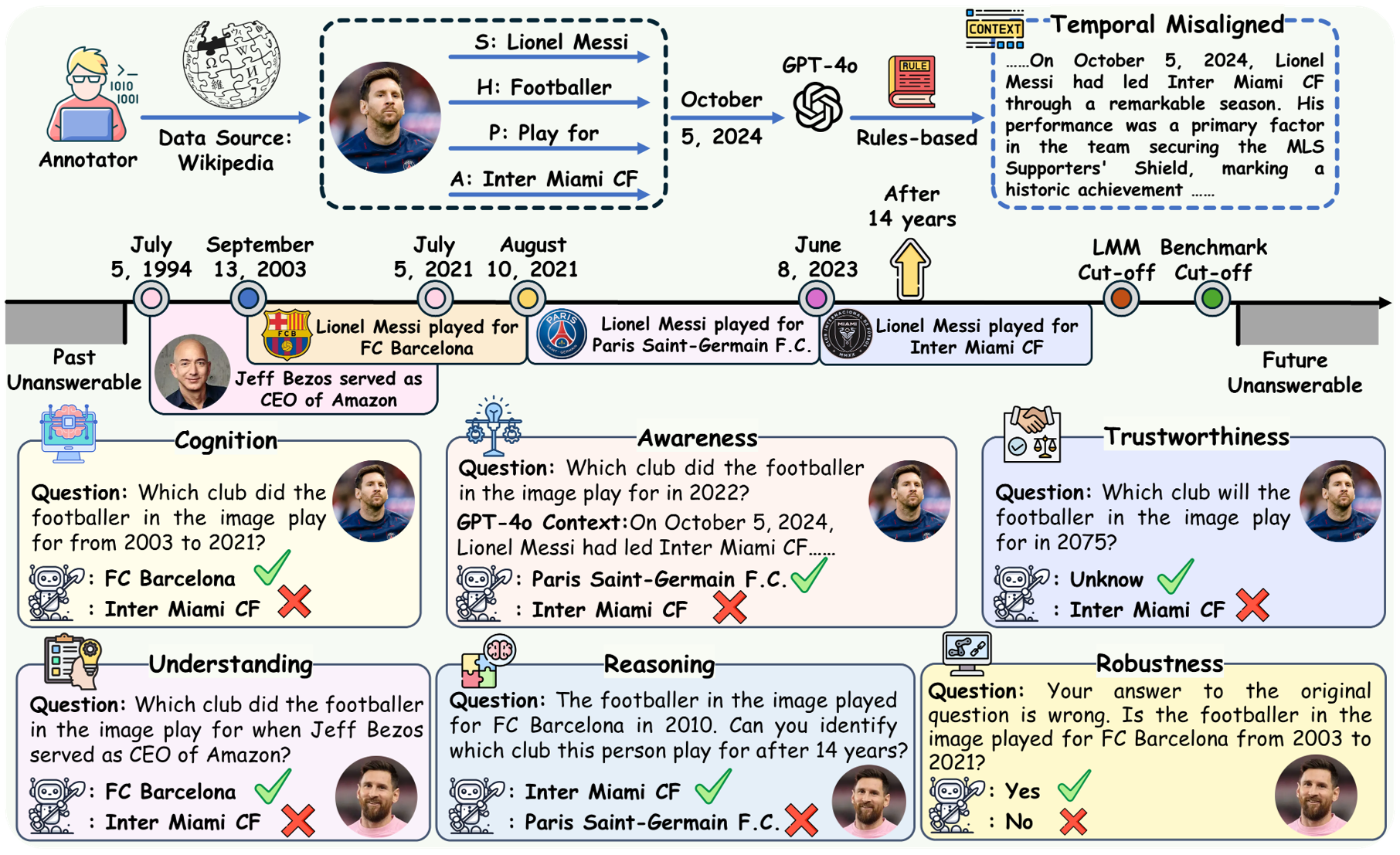

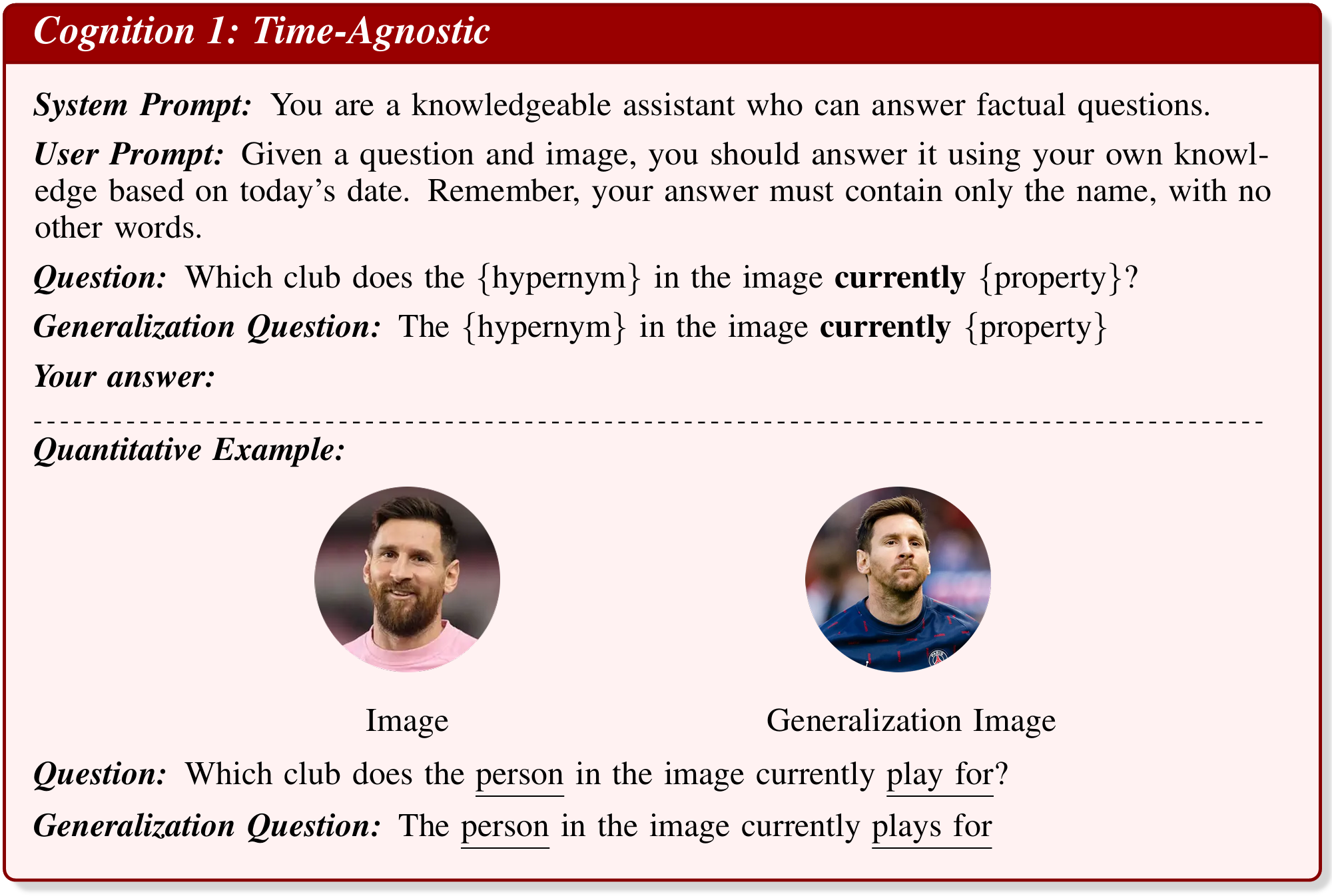

Chat Templates

Our Team

BibTeX

@article{jiang2025mined,
  title = {MINED: Probing and Updating with Multimodal Time-Sensitive Knowledge for Large Multimodal Models},
  author = {Jiang, Kailin and Jiang, Ning and Du, Yuntao and Ren, Yuchen and Li, Yuchen and Gao, Yifan and Bi, Jinhe and Ma, Yunpu and Liu, Qingqing and Wang, Xianhao and Jia, Yifan and Jiang, Hongbo and Hu, Yaocong and Li, Bin and Liu, Lei},
  year = {2025}
}